42. Robustness

42.1. Overview

This lecture modifies a Bellman equation to express a decision maker’s doubts about transition dynamics.

His specification doubts make the decision maker want a robust decision rule.

Robust means insensitive to misspecification of transition dynamics.

The decision maker has a single approximating model.

He calls it approximating to acknowledge that he doesn’t completely trust it.

He fears that outcomes will actually be determined by another model that he cannot describe explicitly.

All that he knows is that the actual data-generating model is in some (uncountable) set of models that surrounds his approximating model.

He quantifies the discrepancy between his approximating model and the genuine data-generating model by using a quantity called entropy.

(We’ll explain what entropy means below.)

He wants a decision rule that will work well enough no matter which of those other models actually governs outcomes.

This is what it means for his decision rule to be “robust to misspecification of an approximating model”.

This may sound like too much to ask for, but a secret weapon is available.

The secret weapon is max-min control theory.

A value-maximizing decision maker enlists the aid of an (imaginary) value-minimizing model chooser to construct bounds on the value attained by a given decision rule under different models of the transition dynamics.

The original decision maker uses those bounds to construct a decision rule with an assured performance level, no matter which model actually governs outcomes.

Note

In reading this lecture, please don’t think that our decision maker is paranoid when he conducts a worst-case analysis. By designing a rule that works well against a worst-case, his intention is to construct a rule that will work well across a set of models.

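42.1.1. Sets of Models Imply Sets Of Values

Our “robust” decision maker wants to know how well a given rule will work when he does not know a single transition law; he wants to know sets of values that will be attained by a given decision rule $F$ under a set of transition laws.

Ultimately, he wants to design a decision rule $F$ that shapes these sets of values in ways that he prefers.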

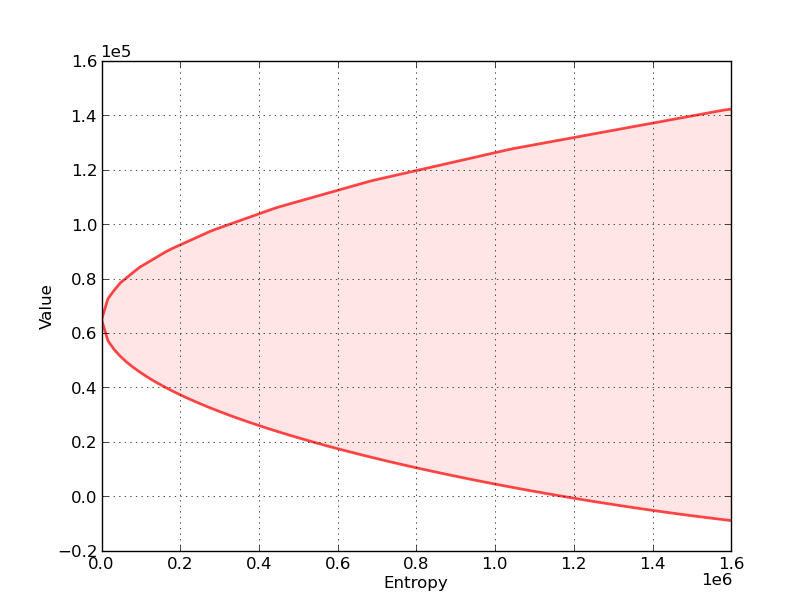

With this in mind, consider the following graph, which relates to a particular decision problem to be explained below.

The figure shows a value-entropy correspondence for a particular decision rule $F$.

The shaded set is the graph of the correspondence, which maps entropy to a set of values associated with a set of models that surround the decision maker’s approximating model.

Here

- Value refers to a sum of discounted rewards obtained by applying the decision rule $F$ from a fixed initial state $x_0$.
- Entropy is a nonnegative number that measures the size of a set of models surrounding the decision maker’s approximating model.
  - Entropy is zero when the set includes only the approximating model, indicating that the decision maker completely trusts the approximating model.
  - Entropy is bigger, and the set of surrounding models is bigger, the less the decision maker trusts the approximating model.

The shaded region indicates that for all models having entropy less than or equal to the number on the horizontal axis, the value obtained will be somewhere within the indicated set of values.

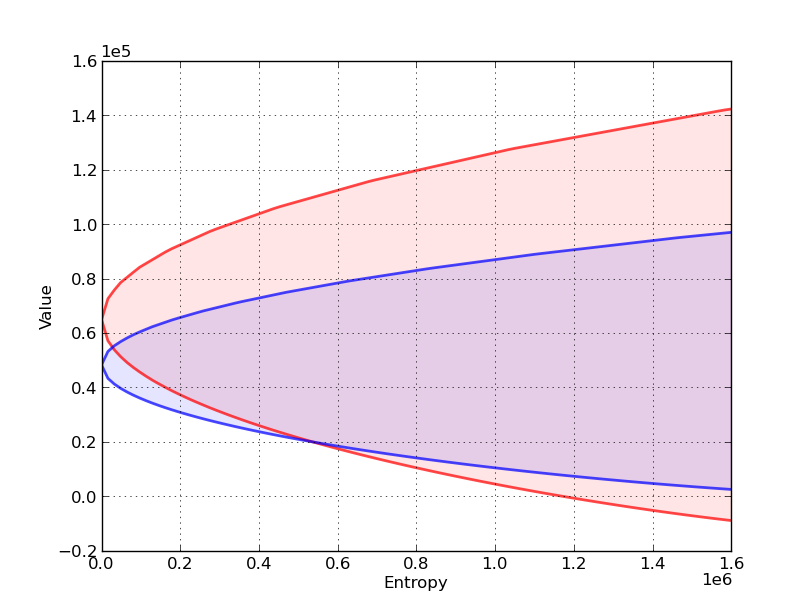

Now let’s compare sets of values associated with two different decision rules, $F_r$ and $F_b$.

In the next figure,

- The red set shows the value-entropy correspondence for decision rule $F_r$.
- The blue set shows the value-entropy correspondence for decision rule $F_b$.

The blue correspondence is skinnier than the red correspondence.

This conveys the sense in which the decision rule $F_b$ is more robust than the decision rule $F_r$:

- more robust means that the set of values is less sensitive to increasing misspecification as measured by entropy.

Notice that the less robust rule $F_r$ promises higher values for small misspecifications (small entropy).

(But it is more fragile in the sense that it is more sensitive to perturbations of the approximating model.)

Below we’ll explain in detail how to construct these sets of values for a given $F$.

Here is a hint about the secret weapons we’ll use to construct these sets:

- We’ll use some min problems to construct the lower bounds.
- We’ll use some max problems to construct the upper bounds.

We will also describe how to choose $F$ to shape the sets of values.

This will involve crafting a skinnier set at the cost of a lower level (at least for low values of entropy).

42.1.2. Inspiring Video

If you want to understand more about why one serious quantitative researcher is interested in this approach, we recommend Lars Peter Hansen’s Nobel lecture.

42.1.3. Other References

Our discussion in this lecture is based on

```julia
using LinearAlgebra, Statistics
```

42.2. The Model

For simplicity, we present ideas in the context of a class of problems with linear transition laws and quadratic objective functions.

To fit in with our earlier lecture on LQ control, we will treat loss minimization rather than value maximization.

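To begin, recall the infinite horizon LQ problem, where an agent chooses a sequence of controls $\{u_t\}$ to minimize

$$
\sum_{t=0}^\infty \beta^t \left\{ x_t' R x_t + u_t' Q u_t \right\} \tag{42.1}
$$

subject to the linear law of motion

$$
x_{t+1} = A x_t + B u_t + C w_{t+1}, \qquad t = 0, 1, 2, \ldots \tag{42.2}
$$

As before,

- $x_t$ is $n \times 1$ and $A$ is $n \times n$
- $u_t$ is $k \times 1$ and $B$ is $n \times k$
- $w_t$ is $j \times 1$ and $C$ is $n \times j$
- $R$ is $n \times n$ and $Q$ is $k \times k$

Here $x_t$ is the state, $u_t$ is the control, and $w_t$ is a shock vector.

For now we take $\{w_t\} := \{w_t\}_{t=1}^\infty$ to be deterministic: a single fixed sequence.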

We also allow for model uncertainty on the part of the agent solving this optimization problem.

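In particular, the agent takes $w_t = 0$ for all $t \geq 0$ as a benchmark model but admits the possibility that this model might be wrong.

As a consequence, she also considers a set of alternative models expressed in terms of sequences $\{w_t\}$ that are “close” to the zero sequence.

She seeks a policy that will do well enough for a set of alternative models whose members are pinned down by sequences $\{w_t\}$.

Soon we’ll quantify the quality of a model specification in terms of the maximal size of the expression $\sum_{t=0}^\infty \beta^{t+1} w_{t+1}' w_{t+1}$.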

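42.3. Constructing More Robust Policies

If our agent takes $\{w_t\}$ as a given deterministic sequence, then, drawing on intuition from earlier lectures on dynamic programming, we can anticipate Bellman equations such as

$$
J_{t-1}(x) = \min_u \left\{ x' R x + u' Q u + \beta J_t(A x + B u + C w_t) \right\}
$$

(Here $J$ depends on $t$ because the sequence $\{w_t\}$ is not recursive.)

Our tool for studying robustness is to construct a rule that works well even if an adverse sequence $\{w_t\}$ occurs.

In our framework, “adverse” means “loss increasing”.

As we’ll see, this will eventually lead us to construct the Bellman equation

$$
J(x) = \min_u \max_w \left\{ x' R x + u' Q u + \beta \left[ J(A x + B u + C w) - \theta w' w \right] \right\} \tag{42.3}
$$

Notice that we’ve added the penalty term $-\theta w' w$.

Since $w' w = \| w \|^2$, this term becomes influential when $w$ moves away from the origin.

The penalty parameter $\theta$ controls how much we penalize the maximizing agent for “harming” the minimizing agent.

By raising $\theta$ more and more, we more and more limit the ability of the maximizing agent to distort outcomes relative to the approximating model.

So bigger $\theta$ is implicitly associated with smaller distortion sequences $\{w_t\}$.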

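42.3.1. Analyzing the Bellman equation

So what does $J$ in (42.3) look like?

As with the ordinary LQ control model, $J$ takes the form $J(x) = x' P x$ for some symmetric positive definite matrix $P$.

One of our main tasks will be to analyze and compute the matrix $P$.

Related tasks will be to study associated feedback rules for $u_t$ and $w_{t+1}$.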

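First, using matrix calculus, you will be able to verify that

$$
\max_w \left\{ (A x + B u + C w)' P (A x + B u + C w) - \theta w' w \right\} = (A x + B u)' \mathcal{D}(P) (A x + B u) \tag{42.4}
$$

where

$$
\mathcal{D}(P) := P + P C (\theta I - C' P C)^{-1} C' P \tag{42.5}
$$

and $I$ is a $j \times j$ identity matrix. Substituting this expression for the maximum into (42.3) yields

$$
x' P x = \min_u \left\{ x' R x + u' Q u + \beta (A x + B u)' \mathcal{D}(P) (A x + B u) \right\} \tag{42.6}
$$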
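The identity (42.4)–(42.5) can be checked numerically. The sketch below uses small hypothetical matrices (not taken from this lecture) and only the `LinearAlgebra` standard library. It lets $z$ stand in for $Ax + Bu$, computes the maximizer $w^* = (\theta I - C'PC)^{-1} C'Pz$ from the first-order condition, and compares the maximized objective with $z'\mathcal{D}(P)z$. The helper name `D_op` is our own.

```julia
using LinearAlgebra

# D(P) := P + P C (θI - C'PC)^{-1} C'P, as in (42.5); D_op is a hypothetical helper name
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

# Hypothetical primitives: n = 2 states, j = 1 shock
P = [2.0 0.5; 0.5 1.0]          # symmetric positive definite
C = reshape([0.3, 0.1], 2, 1)   # n × j loading matrix
θ = 4.0                         # large enough that θI - C'PC is positive definite
z = [1.0, -0.5]                 # stands in for Ax + Bu

# Objective inside the max in (42.4)
objective(w) = dot(z + C * w, P * (z + C * w)) - θ * dot(w, w)

# First-order condition: (θI - C'PC) w = C'P z
w_star = (θ * I - C' * P * C) \ (C' * P * z)

max_value = objective(w_star)
formula_value = dot(z, D_op(P, C, θ) * z)
println("max over w: ", max_value, "   z'D(P)z: ", formula_value)
```

Because $\theta I - C'PC$ is positive definite here, the objective is strictly concave in $w$, so any perturbation of `w_star` lowers it.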

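Using similar mathematics, the solution to this minimization problem is $u = -F x$ where $F := (Q + \beta B' \mathcal{D}(P) B)^{-1} \beta B' \mathcal{D}(P) A$.

Substituting this minimizer back into (42.6) and working through the algebra gives $x' P x = x' \mathcal{B}(\mathcal{D}(P)) x$ for all $x$, or, equivalently,

$$
P = \mathcal{B}(\mathcal{D}(P))
$$

where $\mathcal{D}$ is the operator defined in (42.5) and

$$
\mathcal{B}(P) := R - \beta^2 A' P B (Q + \beta B' P B)^{-1} B' P A + \beta A' P A
$$

The operator $\mathcal{B}$ is the standard (i.e., non-robust) LQ Bellman operator, and $P = \mathcal{B}(P)$ is the standard matrix Riccati equation coming from the Bellman equation.

Under some regularity conditions (see [HS08]), the operator $\mathcal{B} \circ \mathcal{D}$ has a unique positive definite fixed point, which we denote below by $\hat P$.

A robust policy, indexed by $\theta$, is $u = -\hat F x$ where

$$
\hat F := (Q + \beta B' \mathcal{D}(\hat P) B)^{-1} \beta B' \mathcal{D}(\hat P) A \tag{42.7}
$$

We also define

$$
\hat K := (\theta I - C' \hat P C)^{-1} C' \hat P (A - B \hat F) \tag{42.8}
$$

The interpretation of $\hat K$ is that $w_{t+1} = \hat K x_t$ on the worst-case path of $\{x_t\}$, in the sense that this vector is the maximizer of (42.4) evaluated at the fixed rule $u = -\hat F x$.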
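To make $\hat P$, $\hat F$ and $\hat K$ concrete, here is a minimal sketch. It finds $\hat P$ by successive approximation on $\mathcal{B} \circ \mathcal{D}$ starting from $P = 0$, then evaluates (42.7) and (42.8). It assumes hypothetical two-state primitives and a value of $\theta$ comfortably above the breakdown point. The helpers `D_op`, `B_op` and `robust_triple` are illustrative stand-ins, not the QuantEcon.jl API, and plain iteration was chosen for transparency rather than speed.

```julia
using LinearAlgebra

# D(P) from (42.5) and the standard LQ Bellman operator B(P); hypothetical helper names
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))
B_op(P, Q, R, A, B, β) =
    R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A

# Successive approximation P ← B(D(P)) from P = 0, then F̂ from (42.7) and K̂ from (42.8)
function robust_triple(Q, R, A, B, C, β, θ; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = B_op(D_op(P, C, θ), Q, R, A, B, β)
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    DP = D_op(P, C, θ)
    F = (Q + β * B' * DP * B) \ (β * B' * DP * A)
    K = (θ * I - C' * P * C) \ (C' * P * (A - B * F))
    return F, K, P
end

# Hypothetical primitives: n = 2, k = 1, j = 1
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0

F_hat, K_hat, P_hat = robust_triple(Q, R, A, B, C, β, θ)
println("F̂ = ", F_hat, "\nK̂ = ", K_hat, "\nP̂ = ", P_hat)
```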

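Note that $\hat P, \hat F, \hat K$ are all determined by the primitives and $\theta$.

Note also that if $\theta$ is very large, then $\mathcal{D}$ is approximately equal to the identity mapping.

Hence, when $\theta$ is large, $\hat P$ and $\hat F$ are approximately equal to their standard LQ values.

Furthermore, when $\theta$ is large, $\hat K$ is approximately equal to zero.

Conversely, smaller $\theta$ is associated with greater fear of model misspecification and greater concern for robustness.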
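The claim that $\mathcal{D}$ approaches the identity mapping as $\theta$ grows is easy to see numerically. For a fixed $P$, the gap $\mathcal{D}(P) - P = PC(\theta I - C'PC)^{-1}C'P$ shrinks roughly in proportion to $1/\theta$. The matrices below are hypothetical, and `D_op` is our own helper name.

```julia
using LinearAlgebra

# D(P) from (42.5); D_op is a hypothetical helper name
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

P = [2.0 0.5; 0.5 1.0]          # hypothetical symmetric positive definite P
C = reshape([0.3, 0.1], 2, 1)   # hypothetical n × j loading matrix

θs = [1e1, 1e2, 1e3, 1e4]
gaps = [norm(D_op(P, C, θ) - P) for θ in θs]   # Frobenius norm of D(P) - P

for (θ, g) in zip(θs, gaps)
    println("θ = ", θ, "   ‖D(P) - P‖ = ", g)
end
```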

42.4. Robustness as Outcome of a Two-Person Zero-Sum Game

What we have done above can be interpreted in terms of a two-person zero-sum game in which $\hat F, \hat K$ are Nash equilibrium objects.

Agent 1 is our original agent, who seeks to minimize loss in the LQ program while admitting the possibility of misspecification.

Agent 2 is an imaginary malevolent player.

Agent 2’s malevolence helps the original agent to compute bounds on his value function across a set of models.

We begin with agent 2’s problem.

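42.4.1. Agent 2’s Problem

Agent 2

- knows a fixed policy $F$ specifying the behavior of agent 1, in the sense that $u_t = -F x_t$ for all $t$
- responds by choosing a shock sequence $\{w_t\}$ from a set of paths sufficiently close to the benchmark sequence $\{0, 0, 0, \ldots\}$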

A natural way to say “sufficiently close to the zero sequence” is to restrict the summed inner product $\sum_{t=1}^\infty w_t' w_t$ to be small.

However, to obtain a time-invariant recursive formulation, it turns out to be convenient to restrict a discounted inner product

$$
\sum_{t=1}^\infty \beta^t w_t' w_t \leq \eta \tag{42.9}
$$

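Now let $F$ be a fixed policy, and let $J_F(x_0, \mathbf{w})$ be the present-value cost of that policy given sequence $\mathbf{w} := \{w_t\}$ and initial condition $x_0 \in \mathbb{R}^n$.

Substituting $-F x_t$ for $u_t$ in (42.1), this value can be written as

$$
J_F(x_0, \mathbf{w}) := \sum_{t=0}^\infty \beta^t x_t' (R + F' Q F) x_t \tag{42.10}
$$

where

$$
x_{t+1} = (A - B F) x_t + C w_{t+1} \tag{42.11}
$$

and the initial condition $x_0$ is as specified in the left side of (42.10).

Agent 2 chooses $\mathbf{w}$ to maximize agent 1’s loss $J_F(x_0, \mathbf{w})$ subject to (42.9).

Using a Lagrangian formulation, we can express this problem as

$$
\max_{\mathbf{w}} \sum_{t=0}^\infty \beta^t \left\{ x_t' (R + F' Q F) x_t - \beta \theta (w_{t+1}' w_{t+1} - \eta) \right\}
$$

where $\{x_t\}$ satisfies (42.11) and $\theta$ is a Lagrange multiplier on constraint (42.9).

For the moment, let’s take $\theta$ as fixed, allowing us to drop the constant $\beta \theta \eta$ term in the objective function, and hence write the problem as

$$
\max_{\mathbf{w}} \sum_{t=0}^\infty \beta^t \left\{ x_t' (R + F' Q F) x_t - \beta \theta w_{t+1}' w_{t+1} \right\}
$$

or, equivalently,

$$
\min_{\mathbf{w}} \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t + \beta \theta w_{t+1}' w_{t+1} \right\} \tag{42.12}
$$

subject to (42.11).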

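What’s striking about this optimization problem is that it is once again an LQ discounted dynamic programming problem, with $\mathbf{w} = \{w_t\}$ as the sequence of controls.

The expression for the optimal policy can be found by applying the usual LQ formula (see here).

We denote it by $K(F, \theta)$, with the interpretation $w_{t+1} = K(F, \theta) x_t$.

The remaining step for agent 2’s problem is to set $\theta$ to enforce the constraint (42.9), which can be done by choosing $\theta = \theta_\eta$ such that

$$
\beta \sum_{t=0}^\infty \beta^t x_t' K(F, \theta_\eta)' K(F, \theta_\eta) x_t = \eta \tag{42.13}
$$

Here $x_t$ is given by (42.11), which in this case becomes $x_{t+1} = (A - B F + C K(F, \theta)) x_t$.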
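Agent 2’s problem (42.12) is an ordinary discounted LQ problem with state cost $-(R + F'QF)$, control cost $\beta\theta I$, transition matrix $A - BF$ and control matrix $C$, so $K(F, \theta)$ can be computed with any LQ solver. The sketch below uses a plain value-iteration solver (`lq_solve`, our own hypothetical helper rather than the QuantEcon.jl routine). It then evaluates the left side of (42.13) by summing the discounted quadratic form along $x_{t+1} = (A - BF + CK)x_t$. The primitives and the fixed policy $F$ are hypothetical.

```julia
using LinearAlgebra

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t.
# Returns (F, P): optimal policy u = -F x and value x'P x. (Hypothetical helper.)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# Σ_{t≥0} β^t x_t' H x_t along x_{t+1} = M x_t, via O = H + β M'OM (hypothetical helper)
function disc_sum(M, H, β, x0; tol = 1e-12, maxiter = 100_000)
    O = zeros(size(M))
    for _ in 1:maxiter
        O_new = H + β * M' * O * M
        converged = norm(O_new - O) < tol
        O = O_new
        converged && break
    end
    return dot(x0, O * x0)
end

# Agent 2's best response K(F, θ) from (42.12); w_{t+1} = K x_t
function F_to_K_sketch(F, Q, R, A, B, C, β, θ)
    j = size(C, 2)
    Fw, _ = lq_solve(β * θ * Matrix(1.0I, j, j), -(R + F' * Q * F), A - B * F, C, β)
    return -Fw
end

# Hypothetical primitives and fixed policy
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β = 0.95
F = [0.1 0.5]
x0 = [1.0, 1.0]

θs = [5.0, 50.0, 500.0]
Ks = [F_to_K_sketch(F, Q, R, A, B, C, β, θ) for θ in θs]
# Left side of (42.13): β Σ β^t x_t'K'K x_t along x_{t+1} = (A - BF + CK) x_t
ents = [β * disc_sum(A - B * F + C * K, K' * K, β, x0) for K in Ks]
println("entropies: ", ents)
```

As expected, a larger penalty $\theta$ yields a smaller distortion $K$ and hence a smaller entropy.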

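42.4.2. Using Agent 2’s Problem to Construct Bounds on the Value Sets

42.4.2.1. The Lower Bound

Define the minimized object on the right side of problem (42.12) as $R_\theta(x_0, F)$.

Because “minimizers minimize” we have

$$
R_\theta(x_0, F) \leq \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t \right\} + \beta \theta \sum_{t=0}^\infty \beta^t w_{t+1}' w_{t+1},
$$

where $\{x_t\}$ obeys (42.11) from the given initial condition $x_0$. The inequality holds for any sequence $\mathbf{w}$, with equality along the worst-case path $x_{t+1} = (A - B F + C K(F, \theta)) x_t$.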

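This inequality in turn implies the inequality

$$
R_\theta(x_0, F) - \theta \, \mathrm{ent} \leq \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t \right\} \tag{42.14}
$$

where

$$
\mathrm{ent} := \beta \sum_{t=0}^\infty \beta^t w_{t+1}' w_{t+1}
$$

The left side of inequality (42.14) is a straight line in $\mathrm{ent}$ with slope $-\theta$.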

Technically, it is a “separating hyperplane”.

At a particular value of entropy, the line is tangent to the lower bound of values as a function of entropy.

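In particular, the lower bound on the left side of (42.14) is attained when

$$
\mathrm{ent} = \beta \sum_{t=0}^\infty \beta^t x_t' K(F, \theta)' K(F, \theta) x_t \tag{42.15}
$$

To construct the lower bound on the set of values associated with all perturbations $\mathbf{w}$ satisfying the entropy constraint (42.9) at a given entropy level, we proceed as follows:

1. For a given $\theta$, solve the minimization problem (42.12).
2. Compute the minimized value $R_\theta(x_0, F)$ and the associated entropy using (42.15).
3. Compute the lower bound on the value function $R_\theta(x_0, F) - \theta \, \mathrm{ent}$ and plot it against $\mathrm{ent}$.
4. Repeat the preceding three steps for a range of values of $\theta$ to trace out the lower bound.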

Note

This procedure sweeps out a set of separating hyperplanes indexed by different values for the Lagrange multiplier $\theta$.
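The four-step procedure can be sketched in Julia as follows. For each $\theta$ on a grid, the code solves (42.12) with a value-iteration LQ solver, computes the entropy (42.15), and forms the point $(\mathrm{ent}, R_\theta(x_0, F) - \theta\,\mathrm{ent})$. As a check on the tangency claim, it also computes the discounted value directly along the worst-case path, and the two should coincide. The helpers, primitives and policy $F$ are hypothetical.

```julia
using LinearAlgebra

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t; returns (F, P)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# Σ_{t≥0} β^t x_t' H x_t along x_{t+1} = M x_t (hypothetical helper)
function disc_sum(M, H, β, x0; tol = 1e-12, maxiter = 100_000)
    O = zeros(size(M))
    for _ in 1:maxiter
        O_new = H + β * M' * O * M
        converged = norm(O_new - O) < tol
        O = O_new
        converged && break
    end
    return dot(x0, O * x0)
end

# Hypothetical primitives and fixed policy
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β = 0.95
F = [0.1 0.5]
x0 = [1.0, 1.0]
S = R + F' * Q * F        # per-period loss under u = -F x
M0 = A - B * F            # closed loop under the approximating model

function lower_bound_point(θ)
    Fw, Pw = lq_solve(β * θ * Matrix(1.0I, 1, 1), -S, M0, C, β)  # step 1: solve (42.12)
    K = -Fw                                                    # worst case: w_{t+1} = K x_t
    M = M0 + C * K
    ent = β * disc_sum(M, K' * K, β, x0)                       # step 2: entropy (42.15)
    bound = dot(x0, Pw * x0) - θ * ent                         # step 3: R_θ(x0, F) - θ ent
    direct = disc_sum(M, -S, β, x0)                            # value along the worst-case path
    return (ent = ent, bound = bound, direct = direct)
end

points = [lower_bound_point(θ) for θ in range(5.0, 100.0, length = 20)]  # step 4
value_no_distortion = disc_sum(M0, -S, β, x0)   # value under the approximating model (w = 0)
```

Plotting `bound` against `ent` for each point traces out the lower edge of the value-entropy set for this $F$.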

42.4.2.2. The Upper Bound

To construct an upper bound we use a very similar procedure.

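We simply replace the minimization problem (42.12) with the maximization problem

$$
V_{\tilde\theta}(x_0, F) = \max_{\mathbf{w}} \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t - \beta \tilde\theta w_{t+1}' w_{t+1} \right\} \tag{42.16}
$$

where now $\tilde\theta > 0$ penalizes the choice of $\mathbf{w}$ with larger entropy.

(Notice that $\tilde\theta = -\theta$ in problem (42.12).)

Because “maximizers maximize” we have

$$
V_{\tilde\theta}(x_0, F) \geq \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t \right\} - \beta \tilde\theta \sum_{t=0}^\infty \beta^t w_{t+1}' w_{t+1}
$$

which in turn implies the inequality

$$
V_{\tilde\theta}(x_0, F) + \tilde\theta \, \mathrm{ent} \geq \sum_{t=0}^\infty \beta^t \left\{ -x_t' (R + F' Q F) x_t \right\} \tag{42.17}
$$

where

$$
\mathrm{ent} \equiv \beta \sum_{t=0}^\infty \beta^t w_{t+1}' w_{t+1}
$$

The left side of inequality (42.17) is a straight line in $\mathrm{ent}$ with slope $\tilde\theta$.

The upper bound on the left side of (42.17) is attained when

$$
\mathrm{ent} = \beta \sum_{t=0}^\infty \beta^t x_t' K(F, \tilde\theta)' K(F, \tilde\theta) x_t \tag{42.18}
$$

To construct the upper bound on the set of values associated with all perturbations $\mathbf{w}$ with a given entropy, we proceed much as we did for the lower bound:

1. For a given $\tilde\theta$, solve the maximization problem (42.16).
2. Compute the maximized value $V_{\tilde\theta}(x_0, F)$ and the associated entropy using (42.18).
3. Compute the upper bound on the value function $V_{\tilde\theta}(x_0, F) + \tilde\theta \, \mathrm{ent}$ and plot it against $\mathrm{ent}$.
4. Repeat the preceding three steps for a range of values of $\tilde\theta$ to trace out the upper bound.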
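The upper bound uses the same machinery with the sign of the penalty flipped. The maximum in (42.16) equals minus the minimum of an LQ problem with positive definite costs $R + F'QF$ and $\beta\tilde\theta I$, so a standard LQ solver applies directly. This is a sketch under hypothetical primitives, with our own helper names.

```julia
using LinearAlgebra

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t; returns (F, P)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# Σ_{t≥0} β^t x_t' H x_t along x_{t+1} = M x_t (hypothetical helper)
function disc_sum(M, H, β, x0; tol = 1e-12, maxiter = 100_000)
    O = zeros(size(M))
    for _ in 1:maxiter
        O_new = H + β * M' * O * M
        converged = norm(O_new - O) < tol
        O = O_new
        converged && break
    end
    return dot(x0, O * x0)
end

# Hypothetical primitives and fixed policy
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β = 0.95
F = [0.1 0.5]
x0 = [1.0, 1.0]
S = R + F' * Q * F
M0 = A - B * F

function upper_bound_point(θ_tilde)
    # (42.16) as minus the minimum of Σ β^t {x'Sx + βθ̃ w'w}
    Fw, Pw = lq_solve(β * θ_tilde * Matrix(1.0I, 1, 1), S, M0, C, β)
    K = -Fw                                        # best case: w_{t+1} = K x_t
    M = M0 + C * K
    ent = β * disc_sum(M, K' * K, β, x0)           # entropy (42.18)
    V = -dot(x0, Pw * x0)                          # V_θ̃(x0, F)
    return (ent = ent, bound = V + θ_tilde * ent, direct = disc_sum(M, -S, β, x0))
end

points = [upper_bound_point(t) for t in range(5.0, 100.0, length = 20)]
value_no_distortion = disc_sum(M0, -S, β, x0)
```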

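42.4.2.3. Reshaping the set of values

Now in the interest of reshaping these sets of values by choosing $F$, we turn to agent 1’s problem.

42.4.3. Agent 1’s Problem

Now we turn to agent 1, who solves

$$
\min_{\{u_t\}} \sum_{t=0}^\infty \beta^t \left\{ x_t' R x_t + u_t' Q u_t - \beta \theta w_{t+1}' w_{t+1} \right\} \tag{42.19}
$$

where $\{w_{t+1}\}$ satisfies $w_{t+1} = K x_t$.

In other words, agent 1 minimizes

$$
\sum_{t=0}^\infty \beta^t \left\{ x_t' (R - \beta \theta K' K) x_t + u_t' Q u_t \right\} \tag{42.20}
$$

subject to

$$
x_{t+1} = (A + C K) x_t + B u_t \tag{42.21}
$$

Once again, the expression for the optimal policy can be found here; we denote it by $\tilde F$.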
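Agent 1’s problem (42.20)–(42.21) is again a standard LQ problem, now with state cost $R - \beta\theta K'K$ and transition matrix $A + CK$. Below is a minimal sketch of the best response $\Phi(K)$, with hypothetical helpers and primitives. As a sanity check, $K = 0$ recovers the ordinary non-robust LQ policy.

```julia
using LinearAlgebra

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t; returns (F, P)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# Agent 1's best response Φ(K) to a fixed distortion rule w_{t+1} = K x_t (hypothetical helper)
function K_to_F_sketch(K, Q, R, A, B, C, β, θ)
    F, _ = lq_solve(Q, R - β * θ * K' * K, A + C * K, B, β)
    return F
end

# Hypothetical primitives
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0

F_lq, _ = lq_solve(Q, R, A, B, β)                          # non-robust LQ policy
F_zero = K_to_F_sketch(zeros(1, 2), Q, R, A, B, C, β, θ)   # K = 0: no distortion
K = [0.05 0.1]                                              # hypothetical distortion rule
F_tilde = K_to_F_sketch(K, Q, R, A, B, C, β, θ)
println("F (K = 0) = ", F_zero, "   F̃ = ", F_tilde)
```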

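42.4.4. Nash Equilibrium

Clearly the $\tilde F$ we have obtained depends on $K$, which, in agent 2’s problem, depended on an initial policy $F$.

Holding all other parameters fixed, we can represent this relationship as a mapping $\Phi$, where

$$
\tilde F = \Phi(K(F, \theta))
$$

The map $F \mapsto \Phi(K(F, \theta))$ corresponds to a situation in which

1. agent 1 uses an arbitrary initial policy $F$
2. agent 2 best responds to agent 1 by choosing $K(F, \theta)$
3. agent 1 best responds to agent 2 by choosing $\tilde F = \Phi(K(F, \theta))$

As you may have already guessed, the robust policy $\hat F$ defined in (42.7) is a fixed point of the mapping $F \mapsto \Phi(K(F, \theta))$.

In particular, for any given $\theta$,

1. $K(\hat F, \theta) = \hat K$, where $\hat K$ is as given in (42.8)
2. $\Phi(\hat K) = \hat F$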

A sketch of the proof is given in the appendix.
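Both fixed-point properties can also be checked numerically. The sketch below computes $(\hat F, \hat K, \hat P)$ by iterating on $\mathcal{B} \circ \mathcal{D}$. It then solves agent 2’s problem given $\hat F$ and agent 1’s problem given $\hat K$ with a value-iteration LQ solver, and both best responses should reproduce the robust pair up to numerical tolerance. All helper names and primitives are hypothetical, and this is a numerical illustration of the claims, not a proof.

```julia
using LinearAlgebra

# D(P) from (42.5); all helper names below are hypothetical
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t; returns (F, P)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# Robust triple: iterate P ← B(D(P)) from P = 0, then apply (42.7) and (42.8)
function robust_triple(Q, R, A, B, C, β, θ; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        DP = D_op(P, C, θ)
        P_new = R - β^2 * A' * DP * B * ((Q + β * B' * DP * B) \ (B' * DP * A)) + β * A' * DP * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    DP = D_op(P, C, θ)
    F = (Q + β * B' * DP * B) \ (β * B' * DP * A)
    K = (θ * I - C' * P * C) \ (C' * P * (A - B * F))
    return F, K, P
end

# Agent 2's best response K(F, θ), problem (42.12)
F_to_K_sketch(F, Q, R, A, B, C, β, θ) =
    -first(lq_solve(β * θ * Matrix(1.0I, size(C, 2), size(C, 2)), -(R + F' * Q * F), A - B * F, C, β))

# Agent 1's best response Φ(K), problem (42.20)-(42.21)
K_to_F_sketch(K, Q, R, A, B, C, β, θ) =
    first(lq_solve(Q, R - β * θ * K' * K, A + C * K, B, β))

# Hypothetical primitives
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0

F_hat, K_hat, P_hat = robust_triple(Q, R, A, B, C, β, θ)
K_response = F_to_K_sketch(F_hat, Q, R, A, B, C, β, θ)   # claim 1: should equal K̂
F_response = K_to_F_sketch(K_hat, Q, R, A, B, C, β, θ)   # claim 2: should equal F̂
println("‖K(F̂, θ) - K̂‖ = ", norm(K_response - K_hat))
println("‖Φ(K̂) - F̂‖ = ", norm(F_response - F_hat))
```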

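42.5. The Stochastic Case

Now we turn to the stochastic case, where the sequence $\{w_t\}$ is treated as an IID sequence of random vectors.

In this setting, we suppose that our agent is uncertain about the conditional probability distribution of $w_{t+1}$.

The agent takes the standard normal distribution $N(0, I)$ as the baseline conditional distribution, while admitting the possibility that other “nearby” distributions prevail.

These alternative conditional distributions of $w_{t+1}$ might depend nonlinearly on the history $x_s, s \leq t$.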

To implement this idea, we need a notion of what it means for one distribution to be near another one.

Here we adopt a very useful measure of closeness for distributions known as the relative entropy, or Kullback-Leibler divergence.

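For densities $p, q$, the Kullback-Leibler divergence of $q$ from $p$ is defined as

$$
D_{KL}(p, q) := \int \ln \left[ \frac{p(x)}{q(x)} \right] p(x) \, dx
$$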
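As a concrete instance of the definition, take univariate normals $p = N(\mu, \sigma^2)$ and $q = N(0, 1)$. For this pair the integral has the standard closed form $D_{KL}(p, q) = \tfrac{1}{2}(\sigma^2 + \mu^2 - 1 - \ln \sigma^2)$. The sketch below compares that formula with a direct Riemann-sum approximation of the integral. The grid limits and point count are arbitrary choices made for illustration.

```julia
# Density of N(μ, σ²)
normal_pdf(x, μ, σ) = exp(-((x - μ) / σ)^2 / 2) / (σ * sqrt(2π))

# D_KL(p, q) = ∫ ln[p(x)/q(x)] p(x) dx for p = N(μ, σ²), q = N(0, 1), via a Riemann sum on a wide grid
function kl_numeric(μ, σ; lo = -20.0, hi = 20.0, n = 400_001)
    xs = range(lo, hi, length = n)
    h = step(xs)
    total = 0.0
    for x in xs
        p = normal_pdf(x, μ, σ)
        q = normal_pdf(x, 0.0, 1.0)
        if p > 0
            total += log(p / q) * p * h
        end
    end
    return total
end

# Closed form for the same pair of normals
kl_closed_form(μ, σ) = (σ^2 + μ^2 - 1 - log(σ^2)) / 2

μ, σ = 0.5, 1.2
println("numeric: ", kl_numeric(μ, σ), "   closed form: ", kl_closed_form(μ, σ))
```

The divergence is zero only when the two densities coincide, which is what makes it usable as a penalty for departures from the benchmark.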

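Using this notation, we replace (42.3) with the stochastic analogue

$$
J(x) = \min_u \max_{\psi \in \mathcal{P}} \left\{ x' R x + u' Q u + \beta \left[ \int J(A x + B u + C w) \, \psi(dw) - \theta D_{KL}(\psi, \phi) \right] \right\} \tag{42.22}
$$

Here $\mathcal{P}$ represents the set of all densities on $\mathbb{R}^j$ and $\phi$ is the benchmark distribution $N(0, I)$.

The distribution $\psi$ is chosen as the least desirable conditional distribution in terms of next period outcomes, while taking into account the penalty term $\theta D_{KL}(\psi, \phi)$.

This penalty term plays a role analogous to the one played by the deterministic penalty $\theta w' w$ in (42.3), since it discourages large deviations from the benchmark.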

42.5.1. Solving the Model

The maximization problem in (42.22) appears highly nontrivial — after all, we are maximizing over an infinite dimensional space consisting of the entire set of densities.

However, it turns out that the solution is tractable, and in fact also falls within the class of normal distributions.

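First, we note that $J$ has the form $J(x) = x' P x + d$ for some positive definite matrix $P$ and constant real number $d$.

Moreover, it turns out that if $I - \theta^{-1} C' P C$ is positive definite, then

$$
\max_{\psi \in \mathcal{P}} \left\{ \int (A x + B u + C w)' P (A x + B u + C w) \, \psi(dw) - \theta D_{KL}(\psi, \phi) \right\} = (A x + B u)' \mathcal{D}(P) (A x + B u) + \kappa(\theta, P) \tag{42.23}
$$

where

$$
\kappa(\theta, P) := \theta \ln \left[ \det (I - \theta^{-1} C' P C)^{-1} \right]
$$

and the maximizer is the Gaussian distribution

$$
\psi = N \left( (\theta I - C' P C)^{-1} C' P (A x + B u), \; (I - \theta^{-1} C' P C)^{-1} \right) \tag{42.24}
$$

Substituting the expression for the maximum into Bellman equation (42.22) and using $J(x) = x' P x + d$ gives

$$
x' P x + d = \min_u \left\{ x' R x + u' Q u + \beta (A x + B u)' \mathcal{D}(P) (A x + B u) + \beta \left[ d + \kappa(\theta, P) \right] \right\} \tag{42.25}
$$

Since constant terms do not affect minimizers, the solution is the same as (42.6), leading to

$$
x' P x + d = x' \mathcal{B}(\mathcal{D}(P)) x + \beta \left[ d + \kappa(\theta, P) \right]
$$

To solve this Bellman equation, we take $\hat P$ to be the positive definite fixed point of $\mathcal{B} \circ \mathcal{D}$.

In addition, we take $\hat d$ as the real number solving $d = \beta \left[ d + \kappa(\theta, P) \right]$, which is

$$
\hat d := \frac{\beta}{1 - \beta} \kappa(\theta, P) \tag{42.26}
$$

The robust policy in this stochastic case is the minimizer in (42.25), which is once again $u = -\hat F x$ for $\hat F$ given by (42.7).

Substituting the robust policy into (42.24) we obtain the worst-case shock distribution:

$$
w_{t+1} \sim N \left( \hat K x_t, \; (I - \theta^{-1} C' \hat P C)^{-1} \right)
$$

where $\hat K$ is given by (42.8).

Note that the mean of the worst-case shock distribution is equal to the same worst-case $w_{t+1}$ as in the earlier deterministic setting.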
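Given $\hat P$, $\hat F$ and $\hat K$, the constant (42.26) and the worst-case shock distribution are direct matrix computations. The sketch below evaluates them for hypothetical primitives, following the formulas as stated in this section. The helper names are our own, and the state $x$ is an arbitrary illustrative choice.

```julia
using LinearAlgebra

# D(P) from (42.5); helper names are hypothetical
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

# Iterate P ← B(D(P)) from P = 0, then apply (42.7) and (42.8)
function robust_triple(Q, R, A, B, C, β, θ; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        DP = D_op(P, C, θ)
        P_new = R - β^2 * A' * DP * B * ((Q + β * B' * DP * B) \ (B' * DP * A)) + β * A' * DP * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    DP = D_op(P, C, θ)
    F = (Q + β * B' * DP * B) \ (β * B' * DP * A)
    K = (θ * I - C' * P * C) \ (C' * P * (A - B * F))
    return F, K, P
end

# Hypothetical primitives
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0

F_hat, K_hat, P_hat = robust_triple(Q, R, A, B, C, β, θ)

j = size(C, 2)
Σ_worst = inv(Matrix(1.0I, j, j) - C' * P_hat * C / θ)  # worst-case covariance (I - θ⁻¹C'P̂C)⁻¹
κ = θ * log(det(Σ_worst))                               # κ(θ, P̂) = θ ln det[(I - θ⁻¹C'P̂C)⁻¹]
d_hat = β / (1 - β) * κ                                 # (42.26)

x = [1.0, 1.0]                                          # illustrative state
mean_worst = K_hat * x                                  # worst-case mean K̂ x
println("worst-case mean: ", mean_worst, "   covariance: ", Σ_worst, "   d̂ = ", d_hat)
```

The worst-case covariance exceeds the identity, so the distorted shocks are both shifted in mean and more dispersed than under the benchmark.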

42.5.2. Computing Other Quantities

Before turning to implementation, we briefly outline how to compute several other quantities of interest.

42.5.2.1. Worst-Case Value of a Policy

One thing we will be interested in doing is holding a policy fixed and computing the discounted loss associated with that policy.

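So let $F$ be a given policy and let $J_F(x)$ be the associated loss, which, by analogy with (42.22), satisfies

$$
J_F(x) = \max_{\psi \in \mathcal{P}} \left\{ x' (R + F' Q F) x + \beta \left[ \int J_F((A - B F) x + C w) \, \psi(dw) - \theta D_{KL}(\psi, \phi) \right] \right\}
$$

Writing $J_F(x) = x' P_F x + d_F$ and applying the same argument used to derive (42.23), we get

$$
x' P_F x + d_F = x' (R + F' Q F) x + \beta \left[ x' (A - B F)' \mathcal{D}(P_F) (A - B F) x + d_F + \kappa(\theta, P_F) \right]
$$

To solve this we take $P_F$ to be the fixed point

$$
P_F = R + F' Q F + \beta (A - B F)' \mathcal{D}(P_F) (A - B F)
$$

and

$$
d_F := \frac{\beta}{1 - \beta} \kappa(\theta, P_F) = \frac{\beta}{1 - \beta} \theta \ln \left[ \det (I - \theta^{-1} C' P_F C)^{-1} \right] \tag{42.27}
$$

If you skip ahead to the appendix, you will be able to verify that $-P_F$ is the solution to the Bellman equation in agent 2’s problem discussed above; we use this in our computations.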
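Computing $P_F$ and $d_F$ for a given policy is another fixed-point calculation. The sketch below iterates on $P \mapsto R + F'QF + \beta(A - BF)'\mathcal{D}(P)(A - BF)$ from $P = 0$ and evaluates (42.27). As a numerical check of the claim just stated, it compares $P_F$ with minus the value matrix of agent 2’s LQ problem. Helpers, primitives and the policy $F$ are hypothetical; the helpers are not the QuantEcon.jl `evaluate_F` method.

```julia
using LinearAlgebra

# D(P) from (42.5); helper names are hypothetical
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

# Value iteration for min Σ β^t (x'Rx + u'Qu) s.t. x_{t+1} = A x_t + B u_t; returns (F, P)
function lq_solve(Q, R, A, B, β; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = R - β^2 * A' * P * B * ((Q + β * B' * P * B) \ (B' * P * A)) + β * A' * P * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    return (Q + β * B' * P * B) \ (β * B' * P * A), P
end

# P_F and d_F for a fixed policy F
function evaluate_F_sketch(F, Q, R, A, B, C, β, θ; tol = 1e-12, maxiter = 100_000)
    S = R + F' * Q * F          # per-period loss under u = -F x
    M0 = A - B * F              # closed-loop matrix
    P = zeros(size(A))
    for _ in 1:maxiter
        P_new = S + β * M0' * D_op(P, C, θ) * M0
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    j = size(C, 2)
    # (42.27): d_F = β/(1-β) θ ln det[(I - θ⁻¹C'P_F C)⁻¹]
    d = β / (1 - β) * θ * log(1 / det(Matrix(1.0I, j, j) - C' * P * C / θ))
    return P, d
end

# Hypothetical primitives and policy
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0
F = [0.1 0.5]

P_F, d_F = evaluate_F_sketch(F, Q, R, A, B, C, β, θ)
# Agent 2's LQ problem (42.12) for the same F and θ
_, P_agent2 = lq_solve(β * θ * Matrix(1.0I, 1, 1), -(R + F' * Q * F), A - B * F, C, β)
println("P_F = ", P_F, "\n-P_agent2 = ", -P_agent2, "\nd_F = ", d_F)
```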

42.6. Implementation

The QuantEcon.jl package provides a type called RBLQ for implementation of robust LQ optimal control.

The code can be found on GitHub.

Here is a brief description of the methods of the type:

- `d_operator()` and `b_operator()` implement $\mathcal{D}$ and $\mathcal{B}$ respectively
- `robust_rule()` and `robust_rule_simple()` both solve for the triple $\hat F, \hat K, \hat P$, as described in equations (42.7)–(42.8) and the surrounding discussion
  - `robust_rule()` is more efficient
  - `robust_rule_simple()` is more transparent and easier to follow
- `K_to_F()` and `F_to_K()` solve the decision problems of agent 1 and agent 2 respectively
- `compute_deterministic_entropy()` computes the left-hand side of (42.13)
- `evaluate_F()` computes the loss and entropy associated with a given policy; see this discussion

42.7. Application

Let us consider a monopolist similar to this one, but now facing model uncertainty.

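The inverse demand function is

$$
p_t = a_0 - a_1 y_t + d_t
$$

where

$$
d_{t+1} = \rho d_t + \sigma_d w_{t+1}, \quad \{w_t\} \stackrel{\textrm{IID}}{\sim} N(0, 1)
$$

and all parameters are strictly positive.

The period return function for the monopolist is

$$
r_t = p_t y_t - \gamma \frac{(y_{t+1} - y_t)^2}{2} - c y_t
$$

Its objective is to maximize expected discounted profits, or, equivalently, to minimize $\mathbb{E} \sum_{t=0}^\infty \beta^t (-r_t)$.

To form a linear regulator problem, we take the state and control to be

$$
x_t = \begin{bmatrix} 1 \\ y_t \\ d_t \end{bmatrix} \quad \text{and} \quad u_t = y_{t+1} - y_t
$$

Setting $b := (a_0 - c) / 2$ we define

$$
R = - \begin{bmatrix} 0 & b & 0 \\ b & -a_1 & 1/2 \\ 0 & 1/2 & 0 \end{bmatrix} \quad \text{and} \quad Q = \gamma / 2
$$

For the transition matrices we set

$$
A = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & \rho \end{bmatrix}, \quad B = \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad C = \begin{bmatrix} 0 \\ 0 \\ \sigma_d \end{bmatrix}
$$

Our aim is to compute the value-entropy correspondences shown above.

The parameters are $a_0 = 100$, $a_1 = 0.5$, $\rho = 0.9$, $\sigma_d = 0.05$, $\beta = 0.95$, $c = 2$ and $\gamma = 50.0$.

The standard normal distribution for $w_t$ is understood as the agent’s baseline, with uncertainty parameterized by $\theta$.

We compute value-entropy correspondences for two policies:

1. The no concern for robustness policy $F_0$, which is the ordinary LQ loss minimizer.
2. A “moderate” concern for robustness policy $F_b$, with $\theta = 0.002$.

The code for producing the graph shown above, with blue being for the robust policy, is as follows: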

```julia
using QuantEcon, Plots, LinearAlgebra, Interpolations

# model parameters
a_0 = 100
a_1 = 0.5
rho = 0.9
sigma_d = 0.05
beta = 0.95
c = 2
gamma = 50.0
theta = 0.002
ac = (a_0 - c) / 2.0

# define LQ matrices
R = [0 ac 0;
     ac -a_1 0.5;
     0.0 0.5 0]
R = -R  # for minimization
Q = Matrix([gamma / 2.0]')
A = [1.0 0.0 0.0;
     0.0 1.0 0.0;
     0.0 0.0 rho]
B = [0.0 1.0 0.0]'
C = [0.0 0.0 sigma_d]'

## Functions

# value and entropy of policy F when the shock-choosing agent faces penalty theta
function evaluate_policy(theta, F)
    rlq = RBLQ(Q, R, A, B, C, beta, theta)
    K_F, P_F, d_F, O_F, o_F = evaluate_F(rlq, F)
    x0 = [1.0 0.0 0.0]'
    value = -x0' * P_F * x0 .- d_F
    entropy = x0' * O_F * x0 .+ o_F
    return value[1], entropy[1]  # return scalars
end

# (value, entropy) pairs over a grid of theta values:
# positive theta traces the worst case, negative theta the best case
function value_and_entropy(emax, F, bw, grid_size = 1000)
    if lowercase(bw) == "worst"
        thetas = 1 ./ range(1e-8, 1000, length = grid_size)
    else
        thetas = -1 ./ range(1e-8, 1000, length = grid_size)
    end
    data = zeros(grid_size, 2)
    for (i, theta) in enumerate(thetas)
        data[i, :] = collect(evaluate_policy(theta, F))
        if data[i, 2] >= emax  # stop at this entropy level
            data = data[1:i, :]
            break
        end
    end
    return data
end

## Main

# compute optimal rule
optimal_lq = QuantEcon.LQ(Q, R, A, B, C, zero(B'A), bet = beta)
Po, Fo, Do = stationary_values(optimal_lq)

# compute robust rule for our theta
baseline_robust = RBLQ(Q, R, A, B, C, beta, theta)
Fb, Kb, Pb = robust_rule(baseline_robust)

# check that I - C'PC/theta is positive definite, so the worst-case
# covariance matrix exists and theta exceeds the breakdown point
test_matrix = I - C' * Pb * C ./ theta   # a 1×1 matrix, not a UniformScaling
@assert all(x -> x > 0, eigvals(test_matrix))

emax = 1.6e6

# compute values and entropies
optimal_best_case = value_and_entropy(emax, Fo, "best")
robust_best_case = value_and_entropy(emax, Fb, "best")
optimal_worst_case = value_and_entropy(emax, Fo, "worst")
robust_worst_case = value_and_entropy(emax, Fb, "worst")

# entropy increases along each theta grid, so the interpolation knots are ascending
data_pairs = ((optimal_best_case, optimal_worst_case),
              (robust_best_case, robust_worst_case))

egrid = range(0, emax, length = 100)
egrid_data = []
for data_pair in data_pairs
    for data in data_pair
        x, y = data[:, 2], data[:, 1]
        curve = LinearInterpolation(x, y, extrapolation_bc = Line())
        push!(egrid_data, curve.(egrid))
    end
end

plot(egrid, egrid_data, color = [:red :red :blue :blue])
plot!(egrid, egrid_data[1], fillrange = egrid_data[2],
      fillcolor = :red, fillalpha = 0.1, color = :red, legend = :none)
plot!(egrid, egrid_data[3], fillrange = egrid_data[4],
      fillcolor = :blue, fillalpha = 0.1, color = :blue, legend = :none)
plot!(xlabel = "Entropy", ylabel = "Value")
```

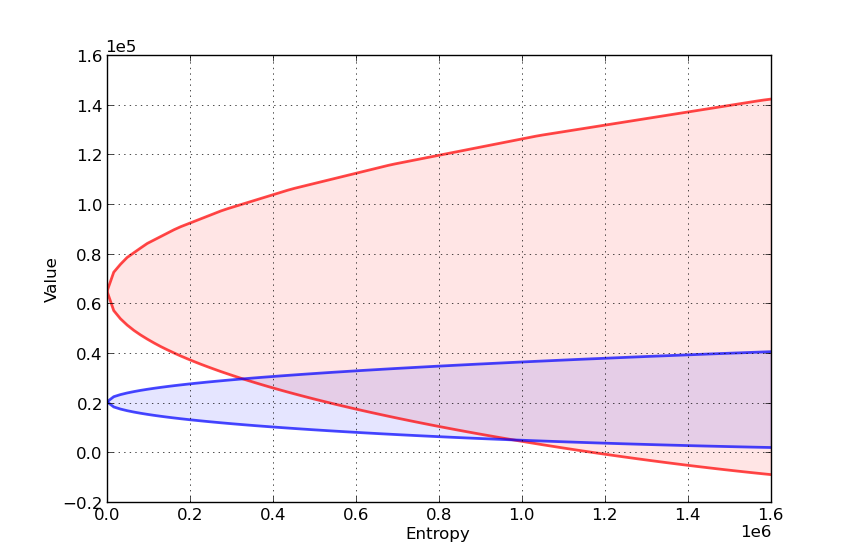

Here’s another such figure, computed with a different value of $\theta$ for the robust policy:

Can you explain the different shape of the value-entropy correspondence for the robust policy?

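42.8. Appendix

We sketch the proof only of the first claim in this section, which is that, for any given $\theta$, $K(\hat F, \theta) = \hat K$, where $\hat K$ is as given in (42.8).

This is the content of the next lemma.

Lemma. If $\hat P$ is the fixed point of the map $\mathcal{B} \circ \mathcal{D}$ and $\hat F$ is the robust policy as given in (42.7), then

$$
K(\hat F, \theta) = (\theta I - C' \hat P C)^{-1} C' \hat P (A - B \hat F) \tag{42.28}
$$

Proof: As a first step, observe that when $F = \hat F$, the Bellman equation associated with the LQ problem (42.11)–(42.12) is

$$
\tilde P = -R - \hat F' Q \hat F - \beta^2 (A - B \hat F)' \tilde P C (\beta \theta I + \beta C' \tilde P C)^{-1} C' \tilde P (A - B \hat F) + \beta (A - B \hat F)' \tilde P (A - B \hat F) \tag{42.29}
$$

(revisit this discussion if you don’t know where (42.29) comes from) and the optimal policy is

$$
w_{t+1} = -\beta (\beta \theta I + \beta C' \tilde P C)^{-1} C' \tilde P (A - B \hat F) x_t
$$

Suppose for a moment that $-\hat P$ solves the Bellman equation (42.29).

In this case the policy becomes

$$
w_{t+1} = (\theta I - C' \hat P C)^{-1} C' \hat P (A - B \hat F) x_t
$$

which is exactly the claim in (42.28).

Hence it remains only to show that $-\hat P$ solves (42.29), or, in other words,

$$
\hat P = R + \hat F' Q \hat F + \beta (A - B \hat F)' \hat P C (\theta I - C' \hat P C)^{-1} C' \hat P (A - B \hat F) + \beta (A - B \hat F)' \hat P (A - B \hat F)
$$

Using the definition of $\mathcal{D}$, we can rewrite the right-hand side more simply as

$$
R + \hat F' Q \hat F + \beta (A - B \hat F)' \mathcal{D}(\hat P) (A - B \hat F)
$$

Although it involves a substantial amount of algebra, it can be shown that the latter is just $\hat P$.

(Hint: Use the fact that $\hat P = \mathcal{B}(\mathcal{D}(\hat P))$.)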
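The key steps of the proof can also be checked numerically. Under hypothetical primitives, the sketch computes $\hat P$ by iterating on $\mathcal{B} \circ \mathcal{D}$ and forms $\hat F$ from (42.7). It then compares $\hat P$ with $R + \hat F'Q\hat F + \beta(A - B\hat F)'\mathcal{D}(\hat P)(A - B\hat F)$. It also evaluates the policy formula below (42.29) at $\tilde P = -\hat P$ and compares the result with $\hat K$ from (42.8). This is a numerical illustration only, not a substitute for the algebra, and the helper names are our own.

```julia
using LinearAlgebra

# D(P) from (42.5); helper names are hypothetical
D_op(P, C, θ) = P + P * C * ((θ * I - C' * P * C) \ (C' * P))

# Iterate P ← B(D(P)) from P = 0, then apply (42.7) and (42.8)
function robust_triple(Q, R, A, B, C, β, θ; tol = 1e-12, maxiter = 100_000)
    P = zeros(size(A))
    for _ in 1:maxiter
        DP = D_op(P, C, θ)
        P_new = R - β^2 * A' * DP * B * ((Q + β * B' * DP * B) \ (B' * DP * A)) + β * A' * DP * A
        converged = norm(P_new - P) < tol
        P = P_new
        converged && break
    end
    DP = D_op(P, C, θ)
    F = (Q + β * B' * DP * B) \ (β * B' * DP * A)
    K = (θ * I - C' * P * C) \ (C' * P * (A - B * F))
    return F, K, P
end

# Hypothetical primitives
A = [0.9 0.1; 0.0 0.8]
B = reshape([0.0, 1.0], 2, 1)
C = reshape([0.1, 0.2], 2, 1)
R = Matrix(1.0I, 2, 2)
Q = fill(1.0, 1, 1)
β, θ = 0.95, 5.0

F_hat, K_hat, P_hat = robust_triple(Q, R, A, B, C, β, θ)
M_hat = A - B * F_hat

# Right-hand side R + F̂'QF̂ + β(A - BF̂)'D(P̂)(A - BF̂); the proof's last step says it equals P̂
rhs = R + F_hat' * Q * F_hat + β * M_hat' * D_op(P_hat, C, θ) * M_hat

# Policy formula below (42.29), evaluated at P̃ = -P̂
P_tilde = -P_hat
j = size(C, 2)
K_from_bellman = -β * ((β * θ * Matrix(1.0I, j, j) + β * C' * P_tilde * C) \ (C' * P_tilde * M_hat))

println("max |rhs - P̂| = ", maximum(abs.(rhs - P_hat)))
println("max |K - K̂|   = ", maximum(abs.(K_from_bellman - K_hat)))
```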